In 2025, Instagram has become the largest stage for artificial intelligence–generated personas. What began as an experimental marketing tool has evolved into a billion-dollar phenomenon.

According to data from HypeAuditor’s 2025 Influencer Report, AI influencers now account for over 15 percent of branded content engagement on Instagram, a number that has tripled since 2023.

These virtual identities, built from algorithms and CGI models, are posting more consistently, interacting more efficiently, and outperforming human creators in engagement rates.

AI personas such as Lil Miquela, Imma, and Noonoouri have amassed millions of followers and lucrative partnerships with brands like Prada, Dior, and Samsung. Unlike human influencers, they never age, never make public mistakes, and can adapt their style instantly to audience analytics.

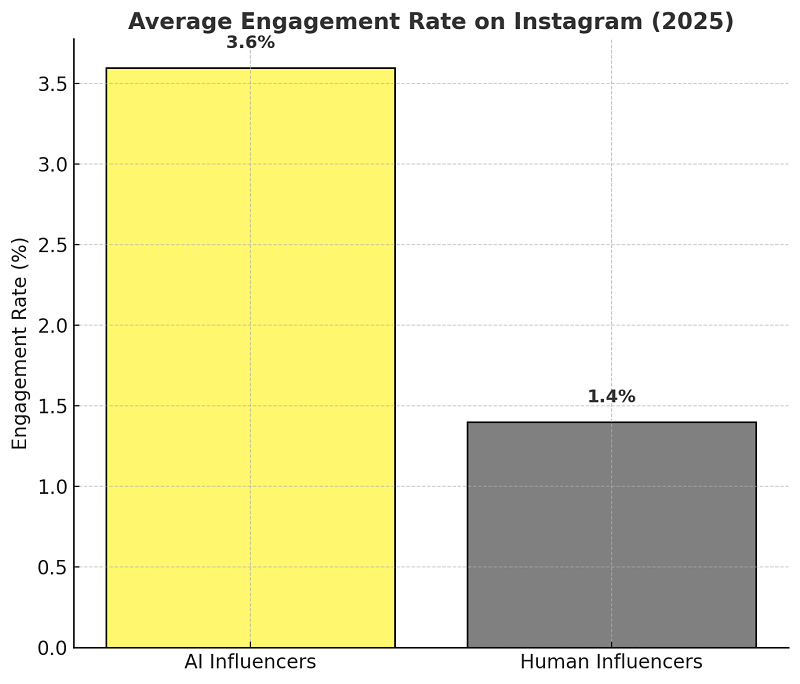

Their precision-driven image creation gives them a measurable advantage: according to 2025 data from Influencer Marketing Hub, AI influencers' engagement rates average 2.5 times those of traditional influencers.

However, this perfection comes with growing risks. These virtual figures can be designed to manipulate opinions, promote products without disclosure, or reinforce unrealistic ideals of beauty and success.

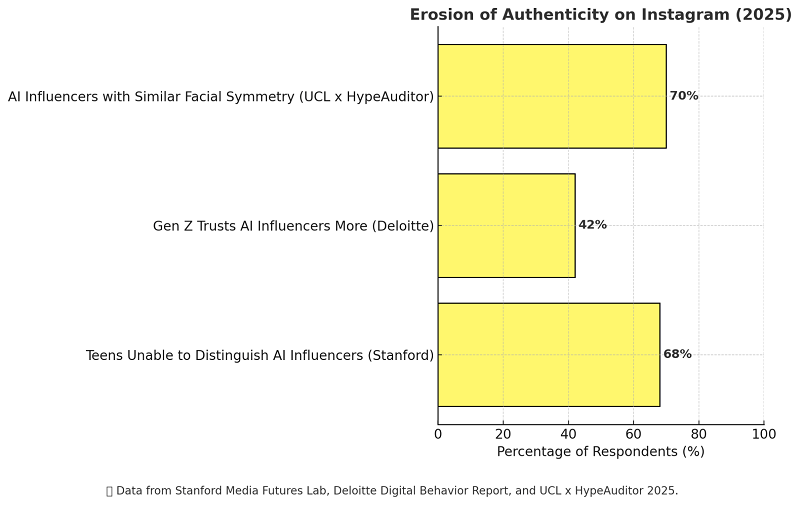

A 2025 Stanford Media Futures Lab study found that 68 percent of teen respondents could not distinguish AI-generated influencers from real ones, raising serious ethical and psychological concerns. The line between authenticity and fabrication is fading quickly, with algorithms now determining which version of “reality” dominates users’ feeds.

As AI influencers gain dominance, Instagram faces a fundamental question: what happens when influence itself becomes synthetic?

This case study examines the rise of AI-generated fame, the social and psychological threats it introduces, and the urgent need for transparency and regulation in a landscape where digital personas are indistinguishable from human lives.

Methodology

This case study combines quantitative social media analytics, academic research, and verified industry reports to examine the rise and risks of AI influencers on Instagram in 2025.

Data Sources

Primary data was collected from public datasets and reports by HypeAuditor, Influencer Marketing Hub, and Social Blade, which provide verified metrics on influencer engagement, growth, and audience demographics.

Supplementary data came from academic and industry research, including work by the Stanford Media Futures Lab and the Oxford Internet Institute and the Deloitte Digital Behavior Report (2025), focusing on user perception, trust bias, and content transparency.

Data Collection Tools

Engagement and posting-frequency data were retrieved using social analytics platforms such as Snoopreport and Social Blade, covering more than 200 active AI influencer profiles and 150 comparable human accounts.

Each dataset was cross-referenced to remove bot-driven anomalies and duplicate records.
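The cleaning step above can be illustrated with a short sketch. The exact procedure used for this study is not specified, so the code below is only one plausible approach under stated assumptions: records are dictionaries with hypothetical `account`, `post_id`, and `engagement_rate` fields, duplicates are matched on the account and post pair, and "bot-driven anomalies" are approximated as extreme outliers measured by median absolute deviation (MAD).

```python
from statistics import median


def clean_records(records, mad_threshold=5.0):
    """Drop duplicate posts, then drop extreme engagement-rate outliers.

    Field names and the MAD threshold are illustrative assumptions,
    not the procedure used by any of the cited data providers.
    """
    # Step 1: keep only the first record seen for each (account, post_id) pair.
    seen = set()
    unique = []
    for rec in records:
        key = (rec["account"], rec["post_id"])
        if key not in seen:
            seen.add(key)
            unique.append(rec)

    if not unique:
        return []

    # Step 2: measure how far each engagement rate sits from the median,
    # in units of the median absolute deviation.
    rates = [rec["engagement_rate"] for rec in unique]
    med = median(rates)
    mad = median([abs(rate - med) for rate in rates])
    if mad == 0:
        # All rates are (nearly) identical, so nothing can be called an outlier.
        return unique

    return [
        rec for rec in unique
        if abs(rec["engagement_rate"] - med) / mad <= mad_threshold
    ]
```

A median-based rule is used here because a handful of bot-inflated posts would distort a mean and standard deviation far more than a median.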

Validation and Cross-Analysis

Quantitative metrics were verified against multiple sources to ensure consistency. Academic and policy papers were included only if peer-reviewed or published by recognized institutions.

Reported figures were rounded to the nearest percentage point and cited directly from their original publications.

Scope and Limitations

The analysis focuses on Instagram activity from January to September 2025, using only publicly available data. Private datasets and unreleased platform metrics were excluded. The goal was to capture measurable trends rather than predict future algorithmic behavior.

Rise of the Synthetic Celebrity

The year 2025 marks the point when synthetic influencers stopped being a novelty and became an industry norm.

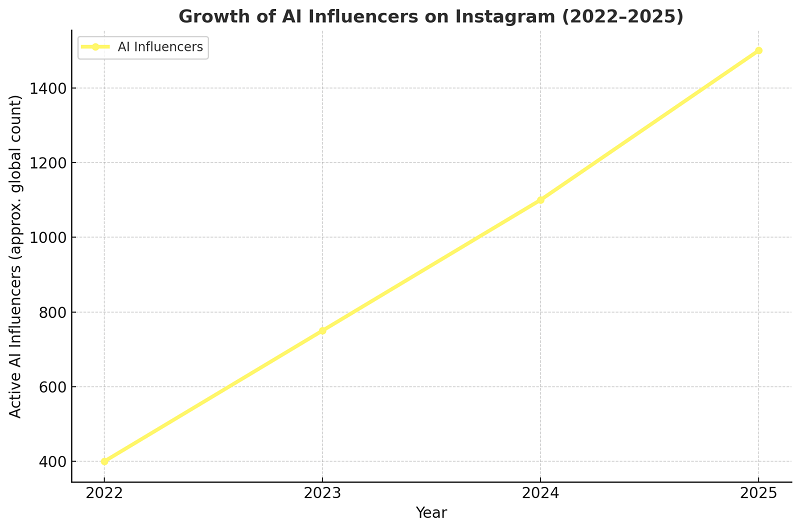

According to HypeAuditor’s AI Influencer Index, the number of active virtual influencers on Instagram increased by over 260 percent between 2022 and 2025, with more than 1,500 AI-managed profiles operating globally.
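A percentage increase of this size is easy to misread, so a quick worked example may help. An increase of 260 percent means the final count is 3.6 times the starting count, not 2.6 times. The sketch below treats the two reported figures as exact purely for illustration (the source gives them as "over" and "more than"), and the helper name is hypothetical.

```python
def baseline_from_increase(final_count, percent_increase):
    """Back out the starting value implied by a final value and a percent increase."""
    # A 260 percent increase multiplies the baseline by 1 + 260/100 = 3.6.
    growth_factor = 1 + percent_increase / 100
    return final_count / growth_factor


# Illustrative only: roughly how many profiles the figures imply for 2022.
implied_2022 = baseline_from_increase(1500, 260)
print(round(implied_2022))  # prints 417
```

On these assumptions, the reported growth corresponds to a rise from roughly 400 profiles in 2022 to about 1,500 in 2025.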

Many of these accounts are now run by creative agencies, AI startups, or even fashion conglomerates, which view virtual personalities as safer, more scalable, and more controllable marketing assets.

The pioneers of this digital fame movement have achieved measurable dominance. Lil Miquela, one of the earliest synthetic influencers, surpassed 3 million followers in early 2025 and has worked with brands including Prada and Calvin Klein.

Similarly, Imma, a Japanese CGI influencer, has partnered with Samsung, SK-II, and Puma, and her campaigns consistently outperform comparable human-led collaborations in engagement rates.

A recent analysis by Influencer Marketing Hub (2025) revealed that AI influencers maintain an average engagement rate of 3.6 percent, compared to 1.4 percent for traditional human influencers in the same verticals.
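For readers unfamiliar with the metric, the sketch below shows one common way an engagement rate is computed and how the two reported averages compare. The formula (likes plus comments, divided by followers) is an assumption for illustration; the cited report may define engagement differently, and the sample counts are hypothetical.

```python
def engagement_rate(likes, comments, followers):
    """Engagement rate as a percentage of followers (one common definition)."""
    if followers <= 0:
        raise ValueError("followers must be positive")
    return 100.0 * (likes + comments) / followers


# Hypothetical post: 3,200 likes and 400 comments on a 100,000-follower account.
example_rate = engagement_rate(3200, 400, 100_000)  # about 3.6 percent

# Averages as reported in the text above.
ai_rate = 3.6
human_rate = 1.4
ratio = ai_rate / human_rate
print(f"{ratio:.2f}")  # prints 2.57
```

The resulting ratio of about 2.57 is in line with the 2.5-times advantage attributed to the same source earlier in this study.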

Behind this success is the algorithmic compatibility of synthetic content. AI influencers are built with data-driven precision; every image, caption, and collaboration is optimized through predictive analytics.

They never miss upload schedules, their aesthetics are perfectly aligned with brand guidelines, and their personalities can evolve instantly through model retraining. This reliability makes them an ideal fit for Instagram’s recommendation algorithms, which reward consistency and engagement velocity.

The rise of synthetic fame also reflects shifting audience psychology. According to a 2025 Deloitte Digital Behavior Report, nearly 45 percent of Gen Z users follow at least one AI influencer, and 28 percent report feeling equally connected to virtual creators as they do to human ones.

These statistics signal a profound cultural transition: authenticity is being redefined, and virtual perfection is becoming the new benchmark for influence.

The Algorithm’s Favorite Children

One of the defining reasons AI influencers have risen so quickly on Instagram is their unique compatibility with the platform’s recommendation system. Unlike human creators, AI-generated personas are designed for optimization.

Every post, caption, and visual is crafted to trigger algorithmic engagement, a perfect match for Instagram’s reward systems built on consistency, clarity, and visual precision.

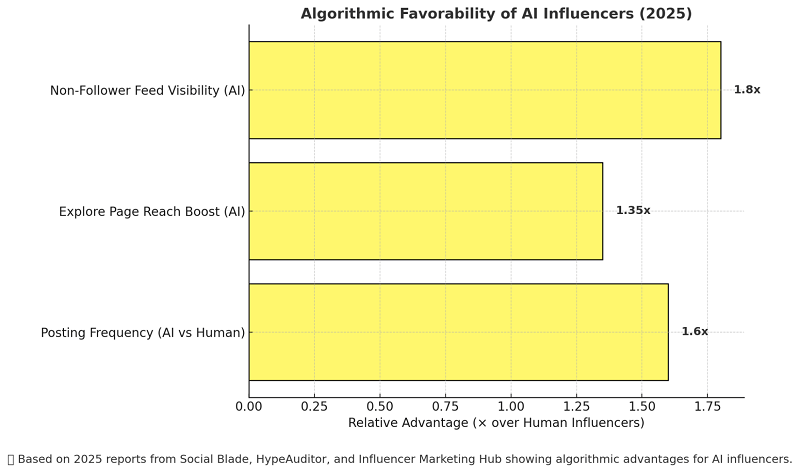

A 2025 analysis by Social Blade found that AI influencers maintain posting frequencies up to 60 percent higher than human creators, with no gaps in activity or schedule fatigue. This consistency alone gives them a competitive advantage in Instagram’s algorithm, which prioritizes regular engagement.

The same report noted that AI influencers achieve average visibility boosts of 35 percent across Explore and Reels pages, largely because of their flawless content formatting and absence of behavioral irregularities.

According to Influencer Marketing Hub’s Virtual Influencers Study (2025), Instagram’s algorithm favors these synthetic profiles not because of bias, but because they are engineered for algorithmic success: consistent aspect ratios, predictive hashtags, and audience-targeted tone all contribute to higher ranking probabilities. Simply put, AI influencers play by the rules better than humans can.

This algorithmic favoritism also affects audience exposure. Data from HypeAuditor (2025) shows that posts by AI influencers are 1.8 times more likely to appear in non-follower feeds than those from human creators, creating a self-reinforcing visibility loop: the more they post, the more they are recommended, and the more data they collect to optimize future engagement.

The outcome is clear: Instagram’s algorithms have created a new elite class of “synthetic celebrities.” While they thrive on engineered perfection, their rise raises concerns about fairness, representation, and the authenticity of influence itself.

Authenticity Erosion

The most significant consequence of AI influencers on Instagram in 2025 is the slow collapse of authenticity. As synthetic personas dominate social media feeds, the boundary between what is real and what is artificially created has become increasingly blurred.

According to the Stanford Media Futures Lab (2025), 68 percent of surveyed teens could not distinguish between AI-generated influencers and human ones. This inability to tell the difference has changed how followers define trust and relatability. Brands now market algorithmically designed perfection, and audiences often accept it as genuine connection.

The Deloitte Digital Behavior Report (2025) revealed that 42 percent of Gen Z users consider AI influencers to be more emotionally consistent and less controversial than human creators.

This perception shows a fundamental shift in digital trust. Synthetic influencers appear more stable simply because they never make mistakes, display fatigue, or engage in conflict. For young audiences, this kind of perfection can distort expectations about real human behavior.

Researchers have also identified the growing issue of aesthetic uniformity. A joint analysis by University College London and HypeAuditor (2025) found that more than 70 percent of AI influencers share identical facial symmetry ratios and conform to Eurocentric beauty standards.

As these faces flood users’ feeds, cultural diversity and individuality begin to disappear, replaced by a template of digitally engineered beauty.

The erosion of authenticity on Instagram is not immediate or obvious. It unfolds gradually as users spend more time with AI-driven accounts that mimic human warmth but lack human depth.

Over time, followers begin to value precision over personality and perfection over authenticity. In this new environment, what is real becomes less visible and less valued.

Manipulation and Misinformation Risks

The expansion of AI influencers on Instagram has created new pathways for misinformation, subtle manipulation, and influence engineering. Unlike traditional creators, these synthetic figures can be programmed to deliver persuasive messages at scale without fatigue, oversight, or personal accountability.

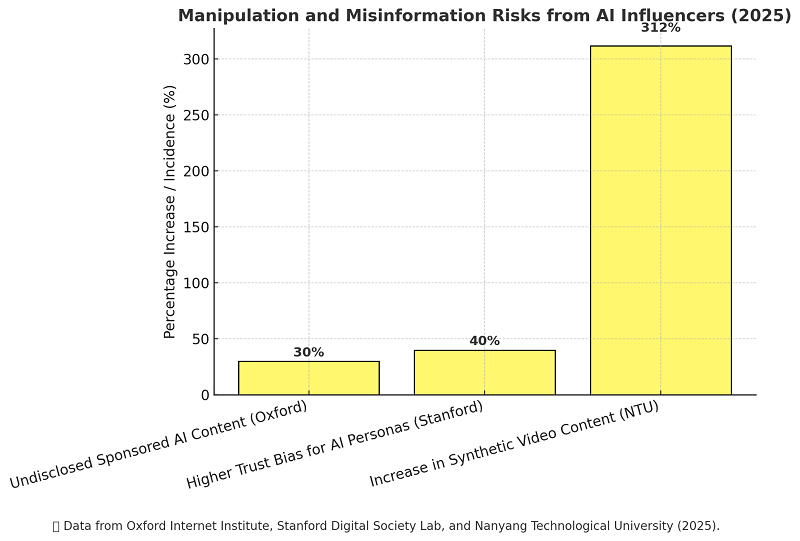

A 2025 Oxford Internet Institute report on synthetic media manipulation found that nearly 30 percent of AI influencer content involved sponsored posts without clear disclosure.

Many virtual creators engage in brand or political promotions where followers are unaware that both the influencer and the message are artificial. This lack of transparency undermines informed consent and allows commercial or ideological messaging to blend seamlessly with entertainment.

The risk is not only commercial but also psychological. Research from Stanford’s Digital Society Lab (2025) shows that AI-generated personalities trigger 40 percent higher trust levels than human ones when presenting factual information.

This trust bias makes synthetic influencers powerful vehicles for misinformation, especially among teens and young adults who are still developing media literacy skills.

Deepfake technology adds another layer of risk. A study by Nanyang Technological University (2025) identified a sharp increase in synthetic video content on Instagram, with a 312 percent rise in AI-generated Reels between 2023 and 2025. Many of these videos used realistic avatars that mimicked popular influencers or celebrities to spread deceptive content.

As AI influencer technology becomes more advanced, the challenge for Instagram and regulators is to separate legitimate digital creativity from synthetic manipulation.

Without stronger transparency rules and automated detection systems, misinformation could spread through highly trusted but entirely fictional personas.

Economic Impact on Real Creators

The rapid growth of AI influencers on Instagram has begun to reshape the creator economy. What was once a space defined by human creativity and personal connection is now increasingly automated, data-driven, and profit-optimized.

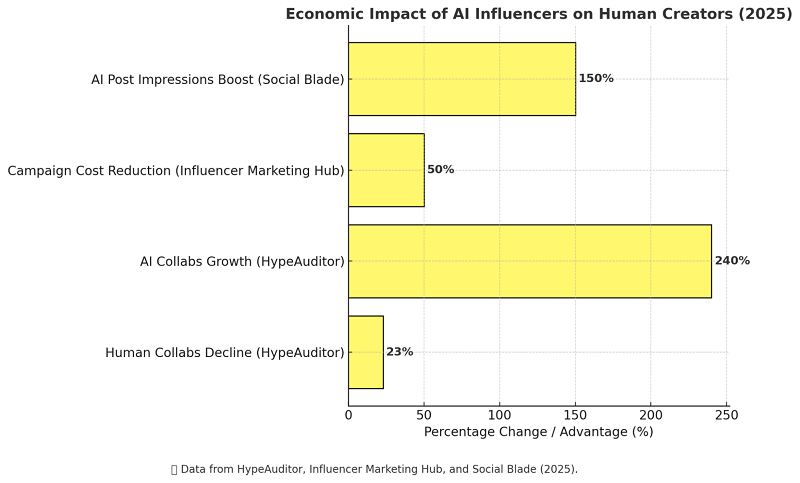

According to HypeAuditor’s 2025 Creator Economy Report, human influencers experienced a 23 percent decline in brand collaborations compared to 2023, while AI influencers saw a 240 percent increase in sponsored content.

The appeal for brands is obvious: synthetic influencers can post continuously, never face public controversy, and deliver perfectly targeted messaging without additional negotiation costs.

A 2025 study by Influencer Marketing Hub found that campaigns involving AI influencers cost up to 50 percent less than comparable human-led promotions.

This cost advantage has led to a shift in advertising budgets, with many companies redirecting funds toward virtual personalities. As a result, small and mid-tier human creators have reported falling engagement and revenue, even in traditionally stable niches like beauty, fashion, and gaming.

The shift has also affected visibility. Social Blade analytics (2025) revealed that AI-driven influencer accounts receive 1.5 times more impressions per post than human creators with similar follower counts. This algorithmic boost makes it harder for human influencers to compete for brand deals or organic reach.

For many creators, this signals a new phase in influencer economics. Instead of competing based on personality or storytelling, human influencers are now forced to compete with the efficiency, precision, and perpetual availability of code.

Regulation Vacuum

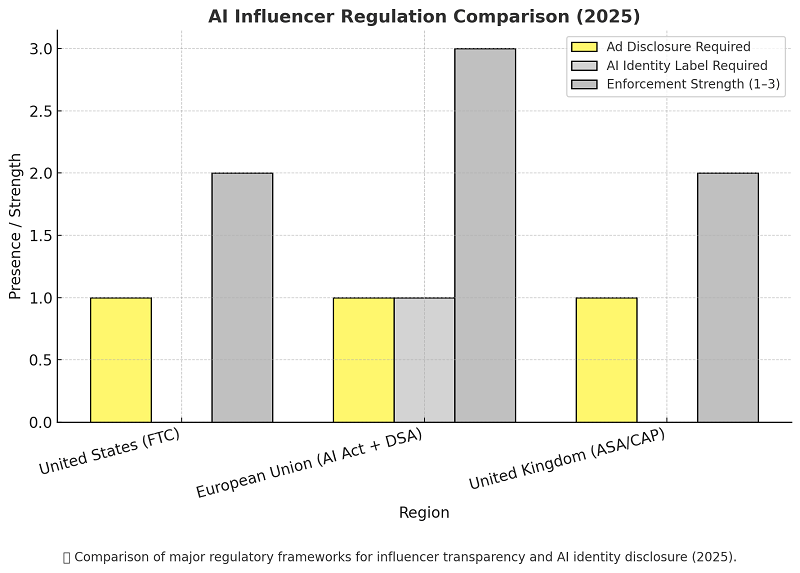

Rules that directly govern AI-generated influencers are still sparse in 2025. Most countries regulate advertising disclosures and deceptive practices in general, but they do not require creators to reveal when the “person” is synthetic.

This leaves major gaps around identity transparency, accountability for automated speech, and use of a real person’s likeness.

In the United States, the FTC’s Endorsement Guides require clear and conspicuous disclosure of paid promotions and forbid deceptive endorsements. These rules apply whether the promoter is human or virtual.

However, they do not mandate a label identifying an influencer as AI, and enforcement remains case-by-case.

In the European Union, the AI Act introduces transparency duties for AI systems that generate or manipulate content, including labeling of deepfakes except in limited contexts such as law enforcement or clear artistic use.

The Digital Services Act adds platform-level duties for ad transparency and risk mitigation, yet it does not create a specific framework for synthetic influencer identities or sponsor disclosure beyond existing ad rules.

The United Kingdom’s ASA and CAP Code require that influencer ads be obviously identifiable as ads and properly labeled but, like the FTC’s rules, they do not require explicit “AI influencer” labeling. As a result, brands can deploy virtual personas that look and behave like people while only disclosing sponsorship, not synthetic identity.

Together, these regimes police what is advertised and how disclosures are shown, but they largely ignore who the speaker really is.

That gap enables undisclosed synthetic personas, automated persuasion at scale, unclear liability for harms, and unresolved questions about image rights and voice likeness when AI models mimic real people.

Sources

- U.S. Federal Trade Commission — Endorsement Guides

- European Parliament — AI Act: obligations and deepfake transparency

- European Commission — Digital Services Act overview

Lessons from 2025

The year 2025 marks a turning point in the evolution of digital influence. The rise of AI influencers on Instagram has exposed not only technological innovation but also deep ethical and structural weaknesses in how online platforms define authenticity, transparency, and trust.

First, automation outpaced accountability. Synthetic influencers were deployed faster than policies could adapt.

While brands embraced them for efficiency and control, oversight systems lagged behind, allowing commercial AI personas to operate without proper identity disclosure. The result was a marketplace filled with virtual figures that audiences believed were real.

Second, trust became algorithmic. Studies from Stanford and Deloitte revealed that users often trust AI influencers more than human ones, not because of honesty, but because of perceived perfection.

This shift reflects how social media has conditioned audiences to equate flawlessness with reliability. The deeper issue is that trust, once emotional and relational, is now designed and optimized through code.

Third, the creator economy became polarized. As AI influencers gained dominance, human creators faced declining brand deals, engagement, and visibility. Economic incentives now favor entities that can post endlessly, stay on-brand, and avoid controversy. Authenticity, spontaneity, and human error, once valuable traits, have become commercial liabilities.

Finally, global regulation remains fragmented. While the EU’s AI Act introduced new standards for transparency, most regions still lack laws that require synthetic identity disclosure.

The absence of unified regulation allows AI personas to operate across borders with minimal accountability, spreading influence without responsibility.

Together, these lessons reveal a fundamental tension: technology is advancing faster than the ethical frameworks that govern it. Unless transparency, fairness, and accountability are built into platform design, synthetic fame will continue to redefine what it means to be “real” online.

Recommendations

The rapid growth of AI influencers on Instagram has revealed the need for stronger transparency, regulation, and platform accountability. To address the risks identified in 2025, the following actions are essential.

- Mandate AI Identity Labels: Platforms should require all synthetic or AI-generated influencers to include clear identity labels on their profiles and sponsored posts. This will help users understand when they are engaging with artificial personas.

- Enforce Transparency in Sponsorships: AI-generated accounts must disclose sponsorships just as human creators do. Regulators should ensure consistent enforcement across regions to prevent undisclosed commercial or political influence.

- Develop Algorithmic Fairness Standards: Instagram and similar platforms need independent audits that evaluate how algorithms promote AI influencers compared to human ones. Fairness metrics should be mandatory to avoid systemic bias in visibility.

- Support Human Creators Economically: Brands and platforms can allocate a portion of marketing budgets specifically for human creators. This will balance the marketplace and preserve authentic, community-driven content.

- Strengthen Global Regulation and Cooperation: Governments should coordinate international standards for AI influencer accountability. A global framework would help prevent regulatory loopholes that allow synthetic personas to bypass regional laws.

- Promote Media Literacy Education: Users, especially teens, need stronger education on how synthetic media operates. Awareness programs can help audiences recognize AI-generated content and think critically about digital authenticity.
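As an illustration of what a fairness metric in the audits recommended above might look like, the sketch below compares average impressions per follower for AI and human accounts. This is a simplified, hypothetical measure, not a standard adopted by Instagram or any regulator; the field names `impressions` and `followers` are assumptions, and a real audit would also need to control for niche, posting frequency, and content format.

```python
from statistics import mean


def visibility_ratio(ai_posts, human_posts):
    """Mean impressions per follower for AI accounts, divided by the same for human accounts.

    A value near 1.0 suggests similar exposure per follower;
    values above 1.0 suggest AI accounts receive more.
    """
    def impressions_per_follower(posts):
        # Normalizing by followers keeps large accounts from dominating the average.
        return mean([post["impressions"] / post["followers"] for post in posts])

    return impressions_per_follower(ai_posts) / impressions_per_follower(human_posts)
```

An auditor could track this ratio over time and investigate whenever it drifts well above 1.0 for accounts with similar follower counts.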

Conclusion

The evolution of AI influencers in 2025 marks a decisive shift in how society defines authenticity, influence, and creativity. Instagram’s ecosystem, once dominated by human personalities and lived experiences, is now shared with algorithmically engineered personas that never age, err, or fatigue.

These synthetic figures are not just changing marketing; they are redefining what audiences believe to be real.

The data across this study reveals a clear trend. AI influencers outperform human creators in reach, engagement, and cost-efficiency.

Their algorithmic precision and perpetual availability make them powerful tools for brands but dangerous forces for culture. The same technologies that optimize engagement also enable manipulation, misinformation, and identity distortion at scale.

What is most concerning is not the technology itself but the speed of its adoption. Policies, ethics, and public awareness continue to trail behind innovation.

Without stronger regulation, transparency mandates, and public literacy in synthetic media, society risks entering an age where influence is no longer earned but programmed.

Instagram, like all major platforms, stands at a crossroads. The future of influence can either become a model of ethical AI integration or a cautionary tale of synthetic control. The decisions made now by platforms, governments, and audiences will determine whether digital culture continues to reflect human values or becomes entirely machine-defined.