In 2025, Instagram remains one of the most widely used platforms among teens, but mounting evidence shows that its safety features are failing young users.

Despite Meta’s promises of “Teen Accounts” and stricter protections, researchers and advocacy groups report that harmful content, predatory accounts, and algorithmic risks still reach underage audiences.

Investigations reveal that features meant to shield teens are either ineffective, easy to bypass, or inconsistently applied. This case study examines where Instagram’s safety systems fall short and what risks these failures create for youth worldwide.

Methodology

This study draws on a mix of independent audits, academic research, advocacy investigations, and platform disclosures:

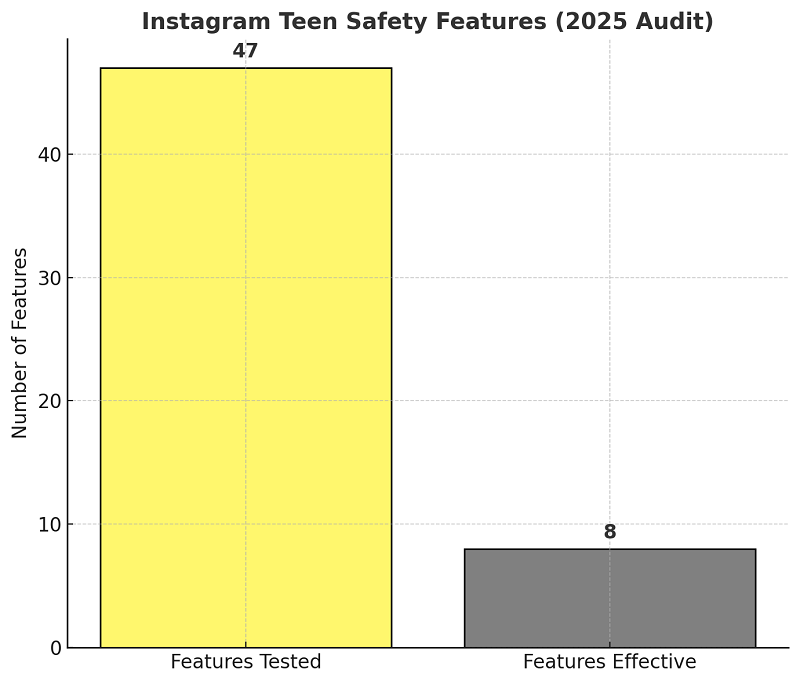

- Investigative Reports: Reuters found that only 8 of 47 tested teen safety features worked as intended (Reuters); Design It For Us and Accountable Tech showed that test Teen Accounts were still exposed to harmful content (DesignItForUs).

- Academic Studies: Algorithm audits found Instagram Reels recommending unsafe content to minors (ArXiv); moderation tests showed harmful videos bypassing filters (ArXiv); and research on teen DMs highlighted ongoing risks around body image and harassment (ArXiv).

- Advocacy Groups & NGOs: Reports by Accountable Tech and the 5Rights Foundation confirmed systemic failures in new Teen Account settings (AccountableTech, 5RightsFoundation).

- Platform & Enforcement Data: Meta announced the removal of 135,000 accounts targeting children in 2025 (Invezz) and rolled out new DM safety tools (SocialMediaToday).

- Policy & Regulation Context: This case study also considers regulatory developments, such as the proposed Kids Online Safety Act in the U.S. (Wikipedia) and age-verification laws worldwide (Wikipedia).

Safety Features vs. Reality

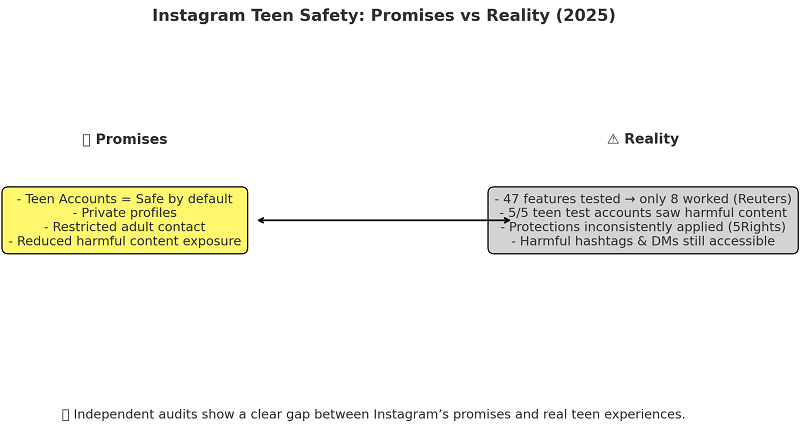

In 2025, Meta positioned its new “Teen Accounts” as a turning point for online safety. The company promised default private settings, restrictions on adult interactions, reduced exposure to sensitive content, and safety prompts in direct messages.

On paper, these measures should have created a safer experience for under-18 users.

The reality, however, tells a different story. Independent tests show that most of these features either fail to activate consistently or are easily bypassed.

A Reuters investigation found that out of 47 safety features evaluated, only 8 worked as intended. Researchers noted that even when safety settings were enabled, harmful content still appeared in feeds and recommendations.

Advocacy groups confirmed these gaps. An audit by Design It For Us and Accountable Tech created multiple Teen Accounts to test protections.

All accounts were still exposed to harmful or distressing content, ranging from eating disorder material to posts glamorizing self-harm. These findings highlight that “Teen Accounts” function more as a marketing promise than as a shield.

The 5Rights Foundation further examined whether Instagram’s protective defaults, such as limited direct messaging and quiet-time prompts, held up in practice. Their conclusion was blunt: safety features are inconsistently applied, leaving many teens just as vulnerable as before.

Even measures like default private profiles often reverted to weaker settings after updates or manual changes.

In short, while Instagram has publicly emphasized its safety efforts, the evidence shows a clear mismatch between promise and performance.

Teens continue to face risks that the platform claims to have addressed, raising questions about whether these safety tools are genuine protections or public relations cover.

Sources

- Reuters — Instagram’s teen safety features are flawed, researchers say (2025)

- Design It For Us / Accountable Tech — Instagram Teen Accounts Report (2025)

- 5Rights Foundation — Teen Accounts Case Study (2025)

Harmful Content Exposure

Despite Instagram’s claims of stronger protections, teens continue to encounter harmful and sensitive material across the platform. The Explore and Reels pages, in particular, remain high-risk areas where inappropriate content surfaces quickly, often within minutes of account creation.

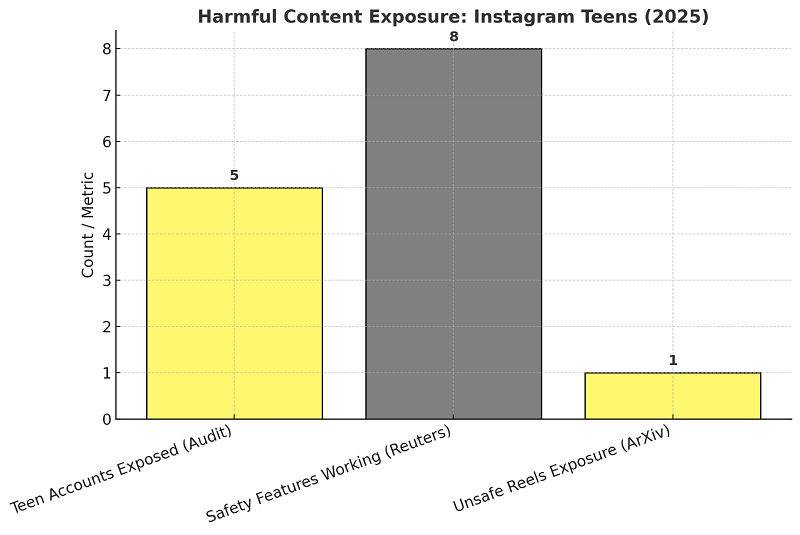

A 2025 audit by Design It For Us and Accountable Tech tested five separate Teen Accounts. All of them were exposed to harmful content in their feeds and recommendations, including posts that glorified eating disorders, promoted self-harm, or encouraged risky behavior. These results show that harmful material bypasses Instagram’s supposed content filters with ease.

Algorithmic audits support these findings. Research published on ArXiv found that Instagram’s recommendation engine frequently promoted unsafe or adult-oriented videos to underage accounts.

The exposure occurred rapidly in some tests, within the first hour of browsing. Instead of reducing risks, Instagram’s algorithm often amplified them by steering teens deeper into harmful content clusters.

Advocacy groups also highlighted how harmful hashtags remain discoverable despite policy bans. Terms associated with body-image pressures, self-harm communities, and sexual exploitation were still suggested or linked through Instagram’s search features.

This loophole means that even when content is officially restricted, teens can find their way into unsafe spaces through related tags and algorithmic nudges.

Taken together, the evidence shows that Instagram’s safety mechanisms do not meaningfully shield young users from harmful exposure. Instead, design flaws in recommendations and search features actively heighten the risks.

Sources

- Design It For Us / Accountable Tech — Instagram Teen Accounts Report (2025)

- ArXiv — Catching Dark Signals: Unsafe Content Recommendations for Children & Teenagers (2025)

- ArXiv — Protecting Young Users on Social Media: Evaluating Content Moderation (2025)

Predatory Risks and Messaging Loopholes

One of Instagram’s most publicized promises in 2025 was to restrict adult-to-teen interactions. Teen Accounts were designed so that adults could not freely message or follow underage users, and teens would receive prompts to block or report suspicious accounts.

On the surface, this should have created a strong barrier against predatory behavior.

However, independent reports show that these safeguards fail in practice. Advocacy group Accountable Tech found that adult strangers were still able to follow and attempt to message teen accounts, even when safety features were enabled.

In some cases, the system allowed “follow requests” that bypassed messaging restrictions, creating openings for unsolicited contact.

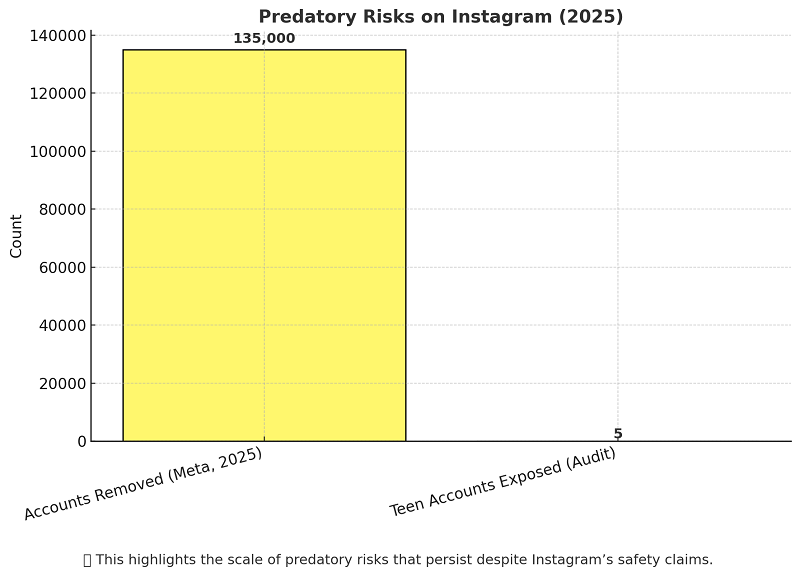

Meta itself admitted the scale of the problem in 2025 when it announced the removal of 135,000 Instagram accounts targeting children.

While this enforcement action shows that harmful actors are being detected, it also reveals how many predatory accounts were able to operate on the platform before being removed.

Academic studies further reinforce the risks. Research into Instagram’s direct messaging system revealed ongoing exposure to harmful interactions around body image, shaming, and harassment among teens.

Despite Instagram’s automated prompts warning users about “potentially unsafe” messages, teens were still able to access and engage with harmful content in private spaces.

The evidence is clear: while Instagram’s policies are designed to limit predatory contact, loopholes in implementation and enforcement continue to expose young users to real risks.

Sources

- Accountable Tech — Scary Feeds: The Reality of Teen Accounts (2025)

- Invezz — Meta removes 135,000 Instagram accounts targeting children (2025)

- ArXiv — Unfiltered: How Teens Engage in Body Image and Shaming in DMs (2025)

Algorithmic Failures

Perhaps the most troubling risk to teen safety on Instagram in 2025 comes from its recommendation systems.

Instagram’s algorithm, designed to maximize engagement, frequently pushes content that is unsafe, addictive, or harmful, even to accounts clearly labeled as belonging to teenagers.

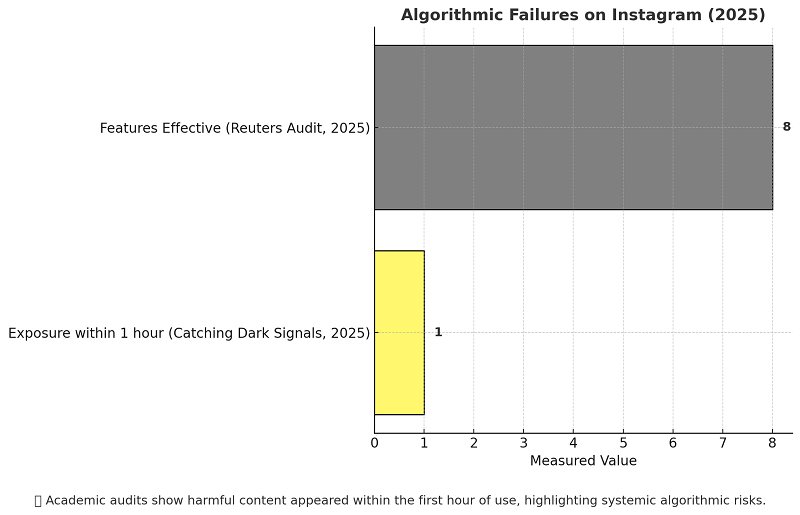

An academic study titled “Catching Dark Signals” found that Instagram’s Reels recommendations regularly promoted explicit or unsafe videos to underage test accounts.

Alarmingly, the exposure often occurred within the first hour of use, demonstrating how quickly algorithms can guide teens into harmful content spirals.

Another study, “Protecting Young Users on Social Media”, compared Instagram to other platforms and revealed that Instagram’s moderation system was inconsistent in filtering harmful video content.

In multiple test scenarios, teen accounts were still served content linked to eating disorders, violence, and sexual material.

The problem lies not only in what the algorithm recommends, but in how it amplifies risks. Once a teen account interacts with or lingers on a harmful video, the algorithm tends to recommend more of the same, creating a feedback loop.

This design flaw turns momentary exposure into long-term risk, trapping teens in cycles of unsafe content.

These findings highlight a systemic issue: Instagram’s algorithms prioritize engagement and watch time above safety. Without structural changes, the recommendation engine itself becomes a driver of harm for young users.

Sources

- ArXiv — Catching Dark Signals: Unsafe Content Recommended for Children & Teenagers (2025)

- ArXiv — Protecting Young Users on Social Media: Evaluating Content Moderation (2025)

Mental Health Concerns

Beyond direct safety risks, Instagram continues to play a significant role in shaping teen mental health, particularly around body image and self-esteem.

Despite Meta’s assurances that Teen Accounts limit exposure to sensitive material, evidence in 2025 shows that harmful content still reaches young users through posts, hashtags, and direct messages.

A study published on ArXiv titled “Unfiltered: How Teens Engage in Body Image and Shaming Discussions via Instagram DMs” found that private messaging spaces remain a hub for harmful exchanges.

Researchers documented instances where teens were exposed to conversations promoting unrealistic body ideals, body shaming, and even encouragement of eating disorder behaviors. The study emphasized that Instagram’s safety prompts inside DMs were insufficient to prevent harmful interactions.

Advocacy groups like the 5Rights Foundation also reported that harmful hashtags and communities promoting unhealthy lifestyles continue to circulate. Even when Instagram blocks certain terms, teens can find related tags or coded language that evade filters.

This loophole leaves vulnerable users exposed to content that normalizes unhealthy or unsafe practices.

The psychological effects of these exposures are well documented: increased anxiety, body dissatisfaction, and a higher risk of disordered eating behaviors. Given Instagram’s visual-first design, its impact on teen self-image is particularly pronounced.

While the platform highlights wellness campaigns and safety guides, the evidence shows that algorithmic amplification of “perfect” aesthetics continues to outweigh positive messaging.

In summary, Instagram’s failure to address body image risks has left a lasting mental health toll on its youngest users. Without stronger safeguards, these concerns will persist, reinforcing cycles of self-doubt and harmful comparison.

Sources

- ArXiv — Unfiltered: Teens & Body Image in Instagram DMs (2025)

- 5Rights Foundation — Teen Accounts Case Study (2025)

The Role of Influencers

Influencers remain central to Instagram’s ecosystem, shaping the trends, aesthetics, and conversations that teens engage with daily.

In theory, large creators could help normalize positive behaviors. In practice, 2025 evidence shows that influencer-driven content often amplifies the very risks Instagram claims to be reducing.

Researchers and advocacy groups have documented how popular accounts contribute to cycles of body image pressure, consumerism, and risky behavior. Even when content does not explicitly promote harm, influencers frequently present curated, idealized lifestyles that teens attempt to replicate.

For younger audiences, this creates patterns of harmful comparison and unrealistic expectations.

The 5Rights Foundation highlighted how Teen Accounts were still exposed to influencer-driven content promoting unhealthy body standards and trends, despite Instagram’s claim that such material was filtered out.

Similarly, the Design It For Us audit reported that harmful hashtags often intersected with influencer posts, magnifying their reach.

Another challenge is that influencers often operate at a scale that makes meaningful moderation difficult. With millions of followers and high engagement, their content spreads faster and slips past detection more readily than posts from smaller accounts.

Academic audits of Instagram’s recommendation systems found that influencer-driven Reels were more likely to surface in teen feeds, even when tagged with sensitive or restricted content.

This shows that the influence of large creators is double-edged: while they can inspire creativity and connection, they also serve as powerful vectors for normalizing harmful standards.

Without stricter moderation and accountability measures, influencers will continue to shape risk patterns for teens on Instagram.

Sources

- 5Rights Foundation — Teen Accounts Case Study (2025)

- Design It For Us / Accountable Tech — Teen Accounts Report (2025)

- ArXiv — Catching Dark Signals: Unsafe Content Recommended for Children & Teenagers (2025)

Broken Promises

Meta has repeatedly assured parents, regulators, and users that Instagram is safe for teens. The launch of Teen Accounts in 2025 was framed as a major step toward protecting young people, with promises of default privacy, reduced contact from adults, and stronger filters for harmful content.

However, independent investigations consistently show a gap between what was promised and what teens actually experience. Reuters reported that of 47 safety features tested, only 8 functioned as intended.

The Design It For Us and Accountable Tech audits confirmed that all teen test accounts were still exposed to harmful or distressing content. Similarly, the 5Rights Foundation found that Teen Account protections, such as “quiet time” or private profiles, were inconsistently applied or easily bypassed.

The contrast between Meta’s public messaging and the evidence from watchdogs raises a question of trust. While Instagram highlights the number of accounts it removes or features it rolls out, the core risks remain unresolved: harmful content exposure, predatory contact, and algorithmic failures. For teens, the safety net is far weaker than advertised.

Sources

- Reuters — Instagram’s teen safety features are flawed (2025)

- Design It For Us / Accountable Tech — Teen Accounts Report (2025)

- 5Rights Foundation — Teen Accounts Case Study (2025)

Policy and Regulation

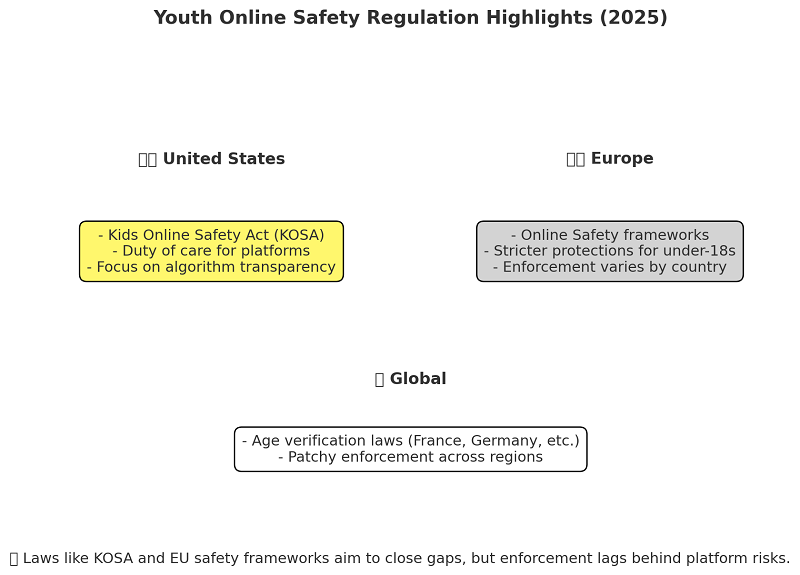

The shortcomings of Instagram’s safety features have intensified calls for stronger oversight. Regulators worldwide are pressing for accountability, with 2025 marking a critical year for youth online safety legislation.

In the U.S., the Kids Online Safety Act (KOSA) has gained momentum. The bill seeks to impose a legal duty of care on platforms, requiring them to prevent exposure to harmful content and provide greater transparency around algorithms.

Advocates argue that Instagram’s failures highlight exactly why such regulation is needed.

Globally, several countries have pursued age-verification laws to restrict under-18 access to risky features.

The UK and EU have advanced online safety frameworks mandating stronger protections, while nations like France and Germany have explored stricter penalties for non-compliance.

However, enforcement remains patchy, and many laws lag behind the evolving tactics of platforms and harmful actors.

Meta, for its part, has responded defensively, announcing high-profile account removals (135,000 predator accounts in 2025) and rolling out incremental features such as DM safety tips.

Yet, without external pressure, these actions remain reactive rather than systemic. Experts warn that relying on voluntary fixes has already failed; only regulation can close the gap between promises and real-world protections.

Sources

- Wikipedia — Kids Online Safety Act (KOSA)

- Wikipedia — Social Media Age Verification Laws by Country

- Invezz — Meta removes 135,000 predator accounts (2025)

Key Lessons

The evidence gathered across audits, research, and policy reviews in 2025 makes one point clear: Instagram’s teen safety strategy is deeply flawed. Several systemic failures stand out:

- Weak Defaults Don’t Protect Teens

Even with Teen Accounts, default privacy settings and safety prompts are inconsistently applied. Protections that should be automatic often fail to activate, leaving young users exposed.

- Algorithms Work Against Safety

Recommendation systems designed to maximize engagement quickly funnel teens toward harmful content. Once exposure happens, the algorithm amplifies it, creating a cycle of risk rather than protection.

- Reactive Moderation Isn’t Enough

While Meta highlights actions like removing 135,000 predator accounts, these measures happen after harm has already occurred. Without proactive safeguards, enforcement lags behind threats.

- Promises Outpace Reality

Instagram continues to overstate the strength of its teen protections, creating a trust gap between what is marketed and what watchdogs uncover.

Taken together, these lessons underscore a critical truth: Instagram’s current model prioritizes growth and engagement over meaningful safety. Without structural reform, teens will remain vulnerable on the platform.

Sources

- Reuters — Instagram’s teen safety features are flawed (2025)

- ArXiv — Catching Dark Signals: Unsafe Content Recommended for Children & Teenagers (2025)

- Invezz — Meta removes 135,000 predator accounts (2025)

Recommendations

The evidence from 2025 shows that Instagram’s existing protections are inadequate. To truly safeguard young users, a shift in both design and accountability is required. The following steps are critical:

- Stronger Default Protections

Teen Accounts should activate all safety features by default (private profiles, limited contact, and restricted hashtags), with no option to disable them until users reach adulthood.

- Transparent Algorithms

Meta must disclose how its recommendation systems are tested for safety and allow independent audits to ensure harmful content isn’t being amplified.

- Proactive Moderation

Instead of celebrating predator account removals after the fact, Instagram should deploy proactive detection systems that prevent harmful contact before it happens.

- Stricter Influencer Accountability

Influencers with teen-heavy audiences should be required to follow stricter content guidelines, with penalties for promoting unsafe trends or harmful aesthetics.

- Independent Oversight

Relying on self-regulation has failed. Independent third-party audits and legal obligations (through measures like KOSA) must hold Instagram accountable for safety claims.

Sources

- Design It For Us / Accountable Tech — Teen Accounts Report (2025)

- ArXiv — Catching Dark Signals: Unsafe Content Recommended for Children & Teenagers (2025)

- Wikipedia — Kids Online Safety Act (KOSA)

Conclusion

Instagram remains one of the most influential platforms for teens, but in 2025 its safety systems are still falling short. Investigations show harmful content, predatory risks, algorithmic failures, and influencer-driven pressures persist despite Meta’s public assurances.

While the company emphasizes account removals and incremental features, these efforts are reactive and insufficient. The platform’s design continues to prioritize engagement over protection, exposing teens to risks that undermine both their mental health and their safety.

The lessons are clear: weak defaults, unsafe algorithms, and broken promises cannot be fixed by self-regulation alone.

Real change requires stronger protections, independent oversight, and enforceable regulations. Until then, Instagram will remain a space where teens are more vulnerable than safe.